Q: Can you tell us what you are working on at the moment?

A: "Together with my wonderful Stanford research lab and collaborators, I work to create algorithms that can quickly and efficiently learn to make good decisions under uncertainty. Such ideas are the foundation of some of the largest breakthroughs in AI over the last decade, such as computers beating the world’s best players of the board game Go. They also have incredible potential to help us make better decisions that could help people, such as in education or healthcare. Those settings are often complicated: it’s hard to use statistical models to capture human behavior, the data is often limited, and there are frequently multiple objectives that need to be maximized simultaneously. This introduces a lot of exciting technical challenges, and those are the focus of my research group."

Q: What are you passionate about?

A: "There’s often a significant gap between the algorithms and ideas being used by the tech giants, like Google, Facebook and Amazon, and those being used in the non-profit and public service sectors. For example, contextual bandit algorithms or reinforcement learning algorithms are used to optimize websites or maximize video view times. But it is far less common to see these algorithms being used to efficiently learn the best personalization to support things like prenatal care outcomes, remote child literacy activities, active civic engagement, or online physical therapy. In such areas, I increasingly think there’s a huge opportunity to leverage ideas from AI, economics and statistics to figure out more quickly what works best. I’m really excited about this potential, and I am starting to work with collaborators and organizations to do this. My hope is that we can make better use of limited resources and amplify the incredible pro-social impact of many organizations."

Q: Are you frightened or excited by AI?

A: "I am in awe of some of the incredible progress of artificial intelligence, especially over the last decade. I also think it is essential to be aware of its potential to be used for harm, either intentionally or through applying it without careful consideration of its potential negative impacts. I very much support the increasing efforts in the AI and machine learning research community to build in structures that train and encourage researchers to reflect on these potential effects, such as short statements at the end of research papers, or the ethics and society review, led by some of my colleagues, that is required for internal funding proposals. I hope such efforts, and thoughtful regulation, will help maximize the positive benefits of AI."

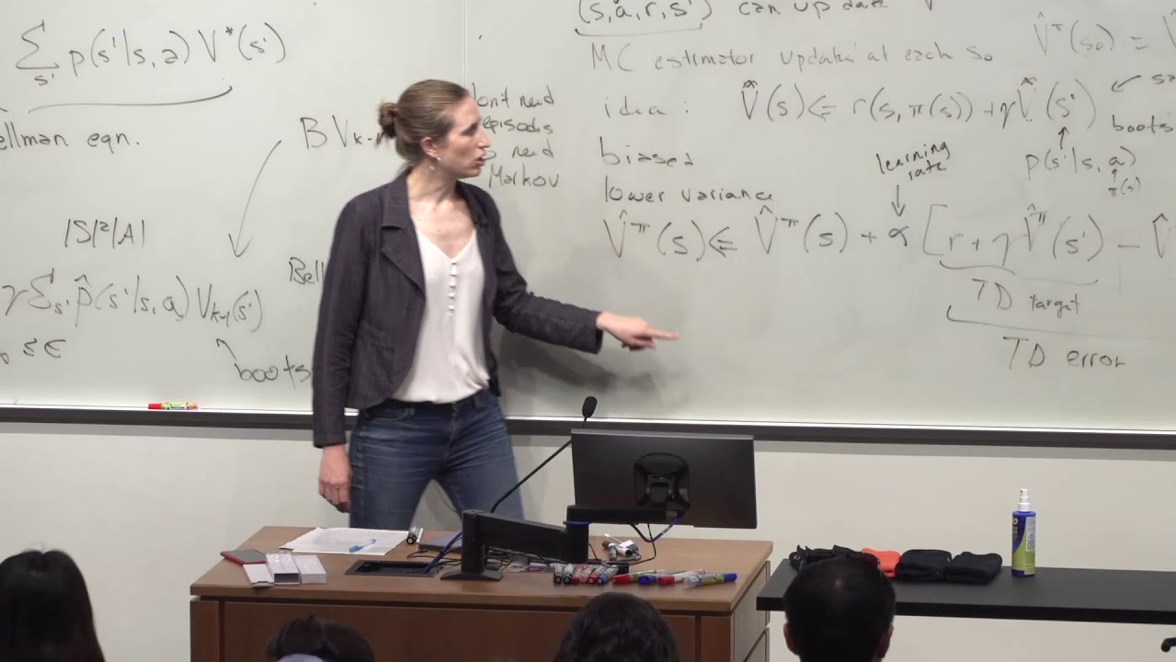

Emma Brunskill (Washington & Magdalen 2001) is a tenured associate professor in the Computer Science Department at Stanford University. Her goal is to create AI systems that learn from limited data to robustly make good decisions, motivated by applications to healthcare and education.